Publications

This is a collection of all peer-reviewed publications that I have (co-)authored, mostly with ACM and a few with IEEE, Springer, etc. I’ve taken care that all papers hosted here are as close as possible to the “official copy of record” (or whatever publishers want to call it). From approximately 2020 on, all publications are also open access, so the copy hosted here is identical to the publisher's.

2024

Dr. Convenience Love or: How I Learned to Stop Worrying and Love my Voice Assistant

NordiCHI '24: Proceedings of the 13th Nordic Conference on Human-Computer Interaction (2024-10-13)

Manual, Hybrid, and Automatic Privacy Covers for Smart Home Cameras

DIS '24: Proceedings of the 2024 ACM Conference on Designing Interactive Systems. Honourable Mention Award (2024-07-01)

Experimenting with new review methods, open practices, and interactive publications in HCI

Extended Abstracts of the 2024 CHI Conference on Human Factors in Computing Systems (2024-05-11)

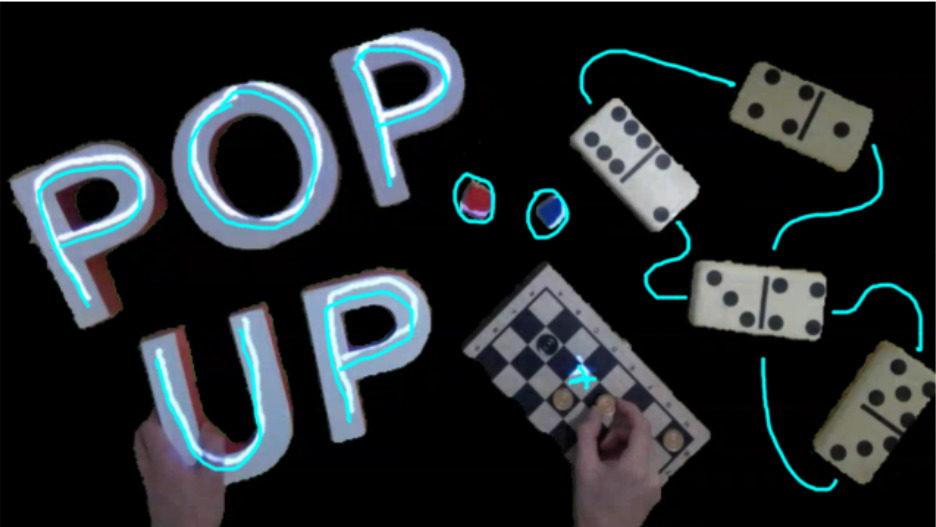

TableCanvas: Remote Open-Ended Play in Physical-Digital Environments

TEI '24: Proceedings of the Eighteenth International Conference on Tangible, Embedded, and Embodied Interaction (2024-02-11)

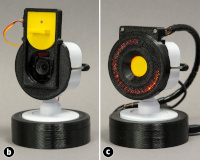

LensLeech: On-Lens Interaction for Arbitrary Camera Devices

TEI '24: Proceedings of the Eighteenth International Conference on Tangible, Embedded, and Embodied Interaction (2024-02-11)

2023

SurfaceCast: Ubiquitous, Cross-Device Surface Sharing

Proceedings of the ACM on Human-Computer Interaction (2023-11-01)

The Journal of Visualization and Interaction

The Journal of Visualization and Interaction (2023-04-19)

2022

Frankie: Exploring How Self-Tracking Technologies Can Go from Data-Centred to Human-Centred

MUM '22: Proceedings of the 21st International Conference on Mobile and Ubiquitous Multimedia (2022-11-27)

HeadsUp: Mobile Collision Warnings through Ultrasound Doppler Sensing

MUM '22: Proceedings of the 21st International Conference on Mobile and Ubiquitous Multimedia (2022-11-27)

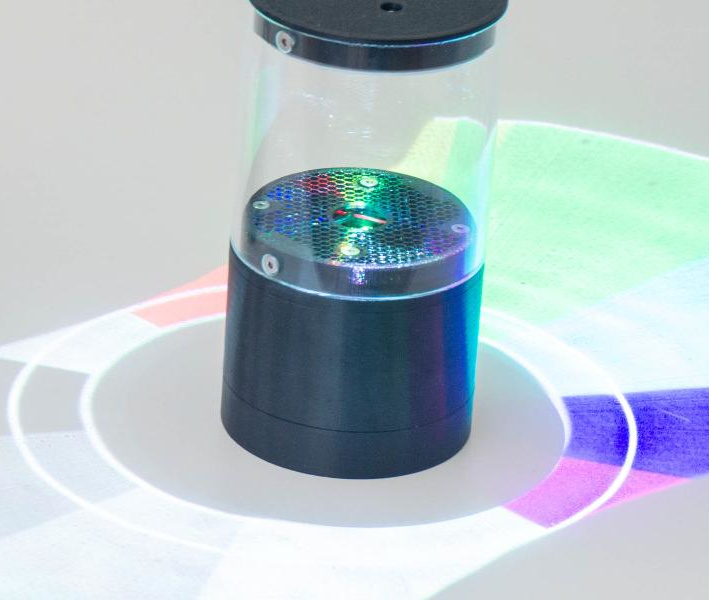

MirrorForge: Rapid Prototyping of Complex Mirrors for Camera and Projector Systems

TEI '22: Proceedings of the Sixteenth International Conference on Tangible, Embedded, and Embodied Interaction. Best Paper Award (2022-02-14)

2021

Rainmaker: A Tangible Work-Companion for the Personal Office Space

MobileHCI '21: Proceedings of the 23rd International Conference on Mobile Human-Computer Interaction (2021-09-27)

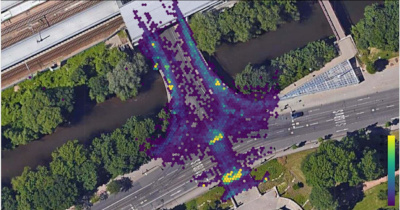

DesPat: Smartphone-Based Object Detection for Citizen Science and Urban Surveys

i-com - Journal of Interactive Media - Special Issue on E-Government and Smart Cities (2021-08-26)

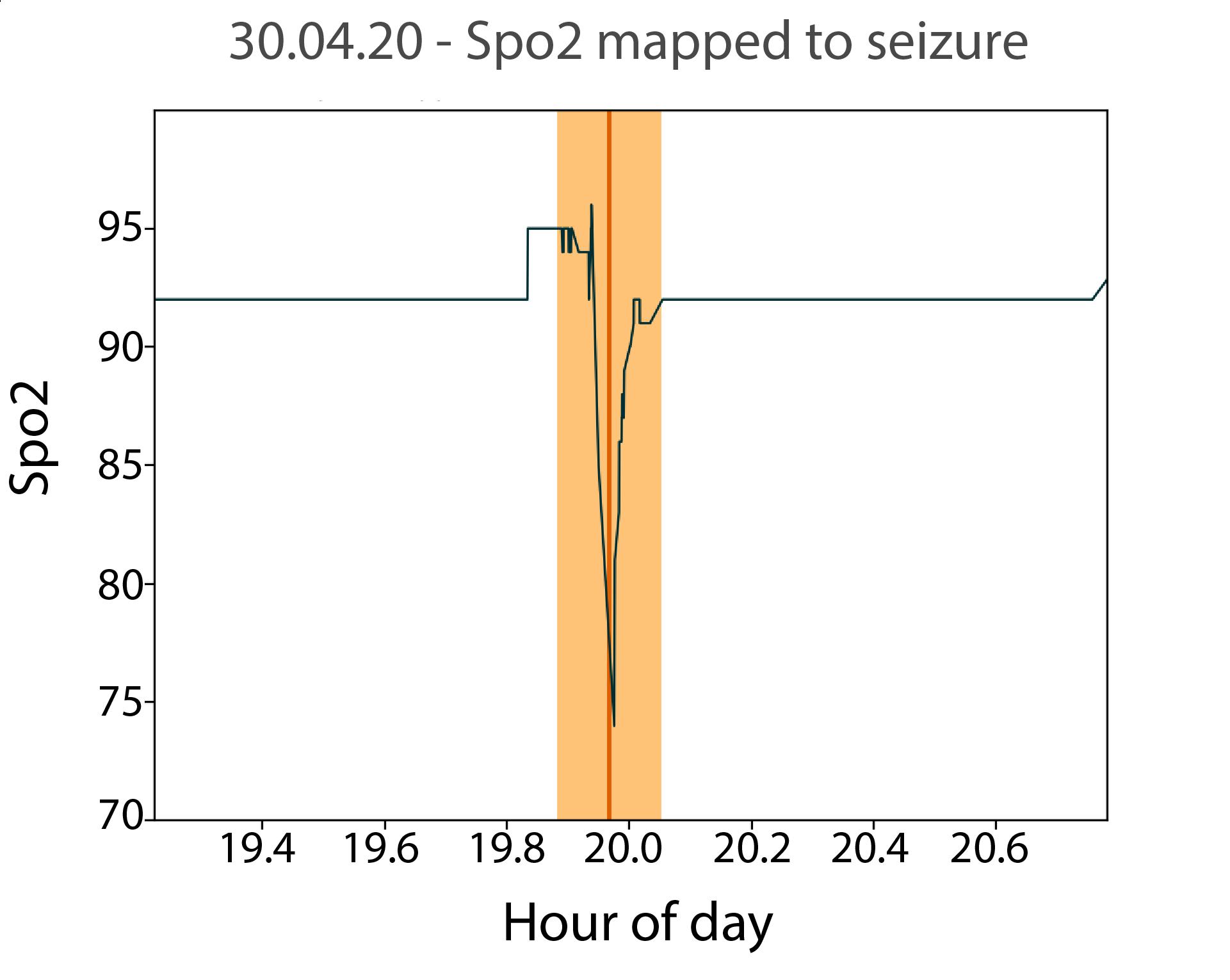

Exploring Epileptic Seizure Detection with Commercial Smartwatches

Proceedings of the 2021 IEEE International Conference on Pervasive Computing and Communications - Workshops and other Affiliated Events (2021-03-26)

TempoWatch: a Wearable Music Control Interface for Dance Instructors

TEI '21: Proceedings of the Fifteenth International Conference on Tangible, Embedded, and Embodied Interaction (2021-02-14)

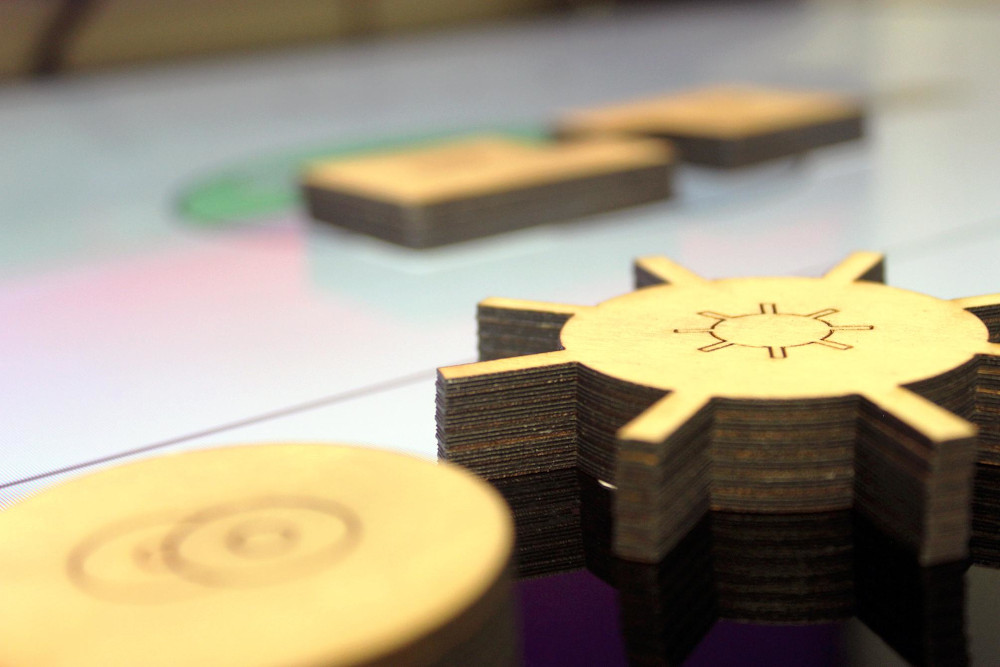

Seedmarkers: Embeddable Markers for Physical Objects

TEI '21: Proceedings of the Fifteenth International Conference on Tangible, Embedded, and Embodied Interaction (2021-02-14)

2020

A dynamic representation of physical exercises on inflatable membranes: Making walking fun again!

ETIS '20: Proceedings of the 4th European Tangible Interaction Studio (2020-11-16)

Cloudless Skies? Decentralizing Mobile Interaction

MobileHCI '20: Proceedings of the 22nd International Conference on Human-Computer Interaction with Mobile Devices and Services (2020-10-05)

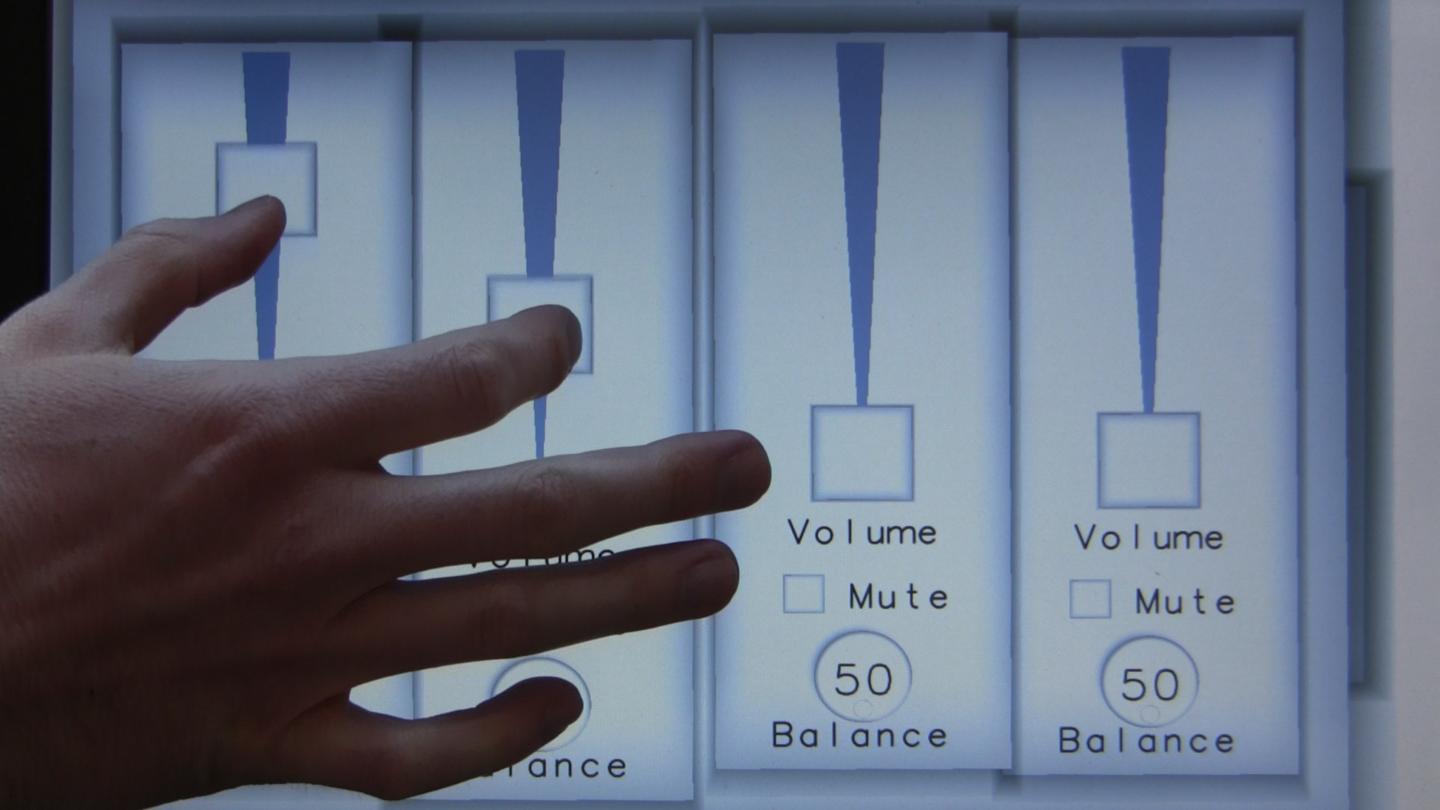

Short-Contact Touch-Manipulation of Scatterplot Matrices on Wall Displays

Computer Graphics Forum, Volume 39, 2020 (2020-07-18)

Tabletop teleporter: evaluating the immersiveness of remote board gaming

Proceedings of the 9th ACM International Symposium on Pervasive Displays (2020-06-04)

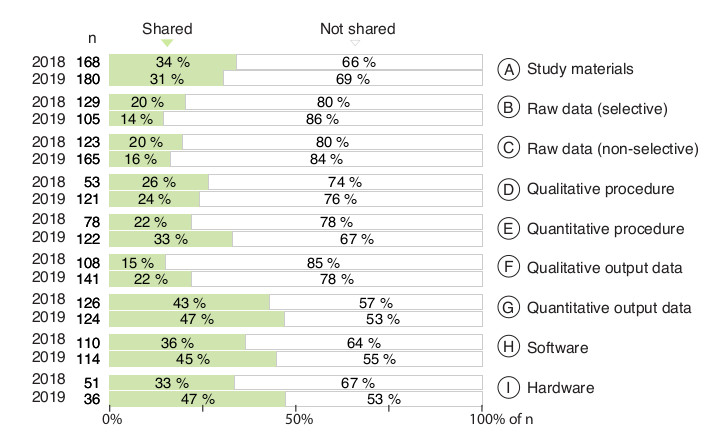

Transparency of CHI Research Artifacts: Results of a Self-Reported Survey

Proceedings of the ACM CHI 2020 Conference on Human Factors in Computing Systems. Best Paper Award (top 1%) (2020-04-25)

2019

BinarySwipes: Fast List Search on Small Touchscreens

MuC '19 - Proceedings of Mensch & Computer (2019-09-08)

SecuriCast: Zero-Touch Two-Factor Authentication using WebBluetooth

EICS '19 - Proceedings of the ACM SIGCHI Symposium on Engineering Interactive Computing Systems (2019-06-18)

2018

SurfaceStreams: A Content-Agnostic Streaming Toolkit for Interactive Surfaces

ACM UIST 2018 Adjunct Proceedings (2018-10-04)

Companion - A Software Toolkit for Digitally Aided Pen-and-Paper Tabletop Roleplaying

ACM UIST 2018 Adjunct Proceedings (2018-10-04)

The TUIO 2.0 Protocol: An Abstraction Framework for Tangible Interactive Surfaces

Proceedings of the ACM on Human-Computer Interaction - EICS (2018-06-01)

Open Source, Open Science, and the Replication Crisis in HCI

CHI '18 Extended Abstracts on Human Factors in Computing Systems (2018-04-21)

2017

TouchScope: A Hybrid Multitouch Oscilloscope Interface

Proceedings of the 19th ACM International Conference on Multimodal Interaction (ICMI '17) (2017-11-13)

ShakeCast: using handshake detection for automated, setup-free exchange of contact data

Proceedings of the 19th International Conference on Human-Computer Interaction with Mobile Devices and Services (MobileHCI '17) (2017-09-04)

Designing Interactive Advertisements for Public Displays

Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (2017-05-06)

2016

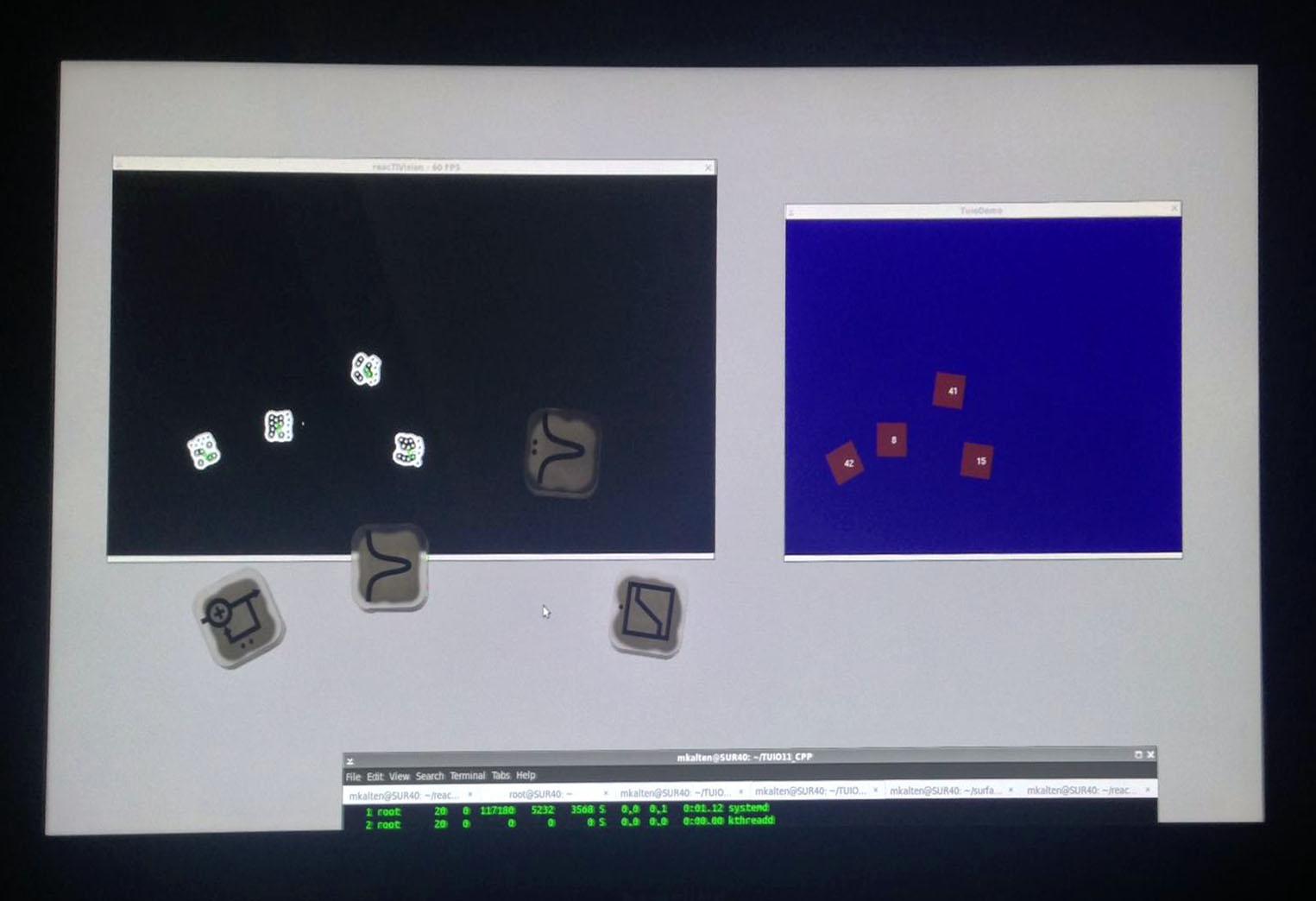

SUR40 Linux: Reanimating an Obsolete Tangible Interaction Platform

Proceedings of the 2016 ACM on Interactive Surfaces and Spaces (ACM ISS '16) (2016-11-06)

The Massive Mobile Multiuser Framework: Enabling Ad-hoc Realtime Interaction on Public Displays with Mobile Devices

Proceedings of the 5th International Symposium on Pervasive Displays (PerDis'16) (2016-06-20)

MMM Ball: Showcasing the Massive Mobile Multiuser Framework

Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems (2016-05-07)

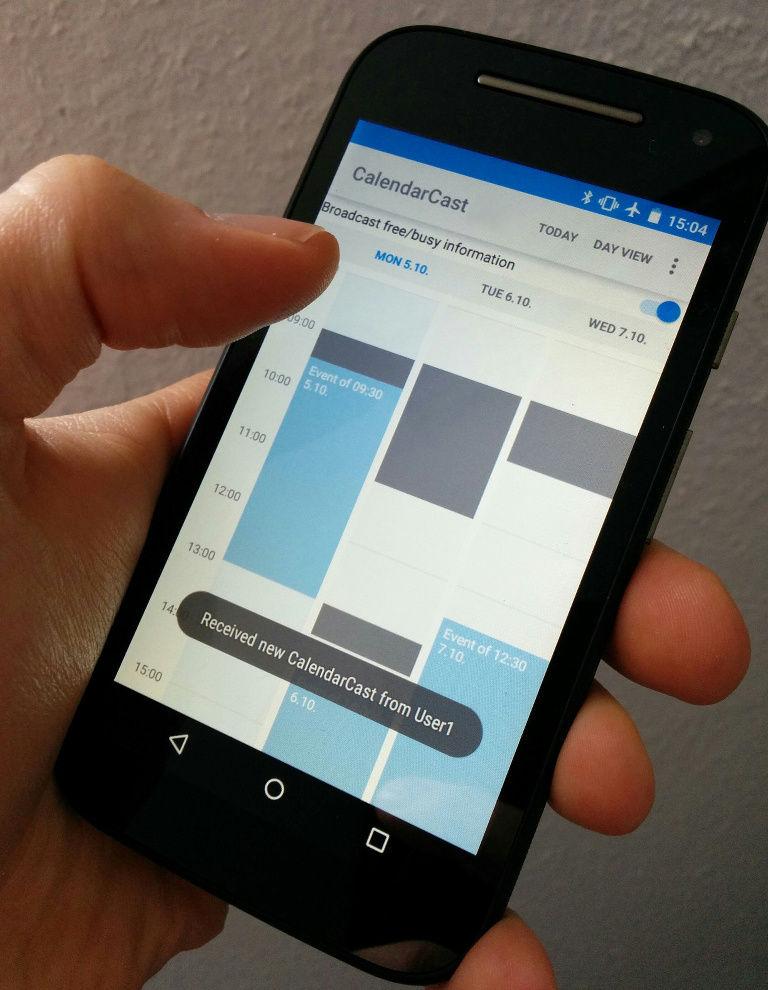

CalendarCast: Setup-Free, Privacy-Preserving, Localized Sharing of Appointment Data

Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems (2016-05-07)

2015

pART bench: a Hybrid Search Tool for Floor Plans in Architecture

Proceedings of the 2015 International Conference on Interactive Tabletops & Surfaces (ITS '15), Funchal, Madeira (2015-11-15)

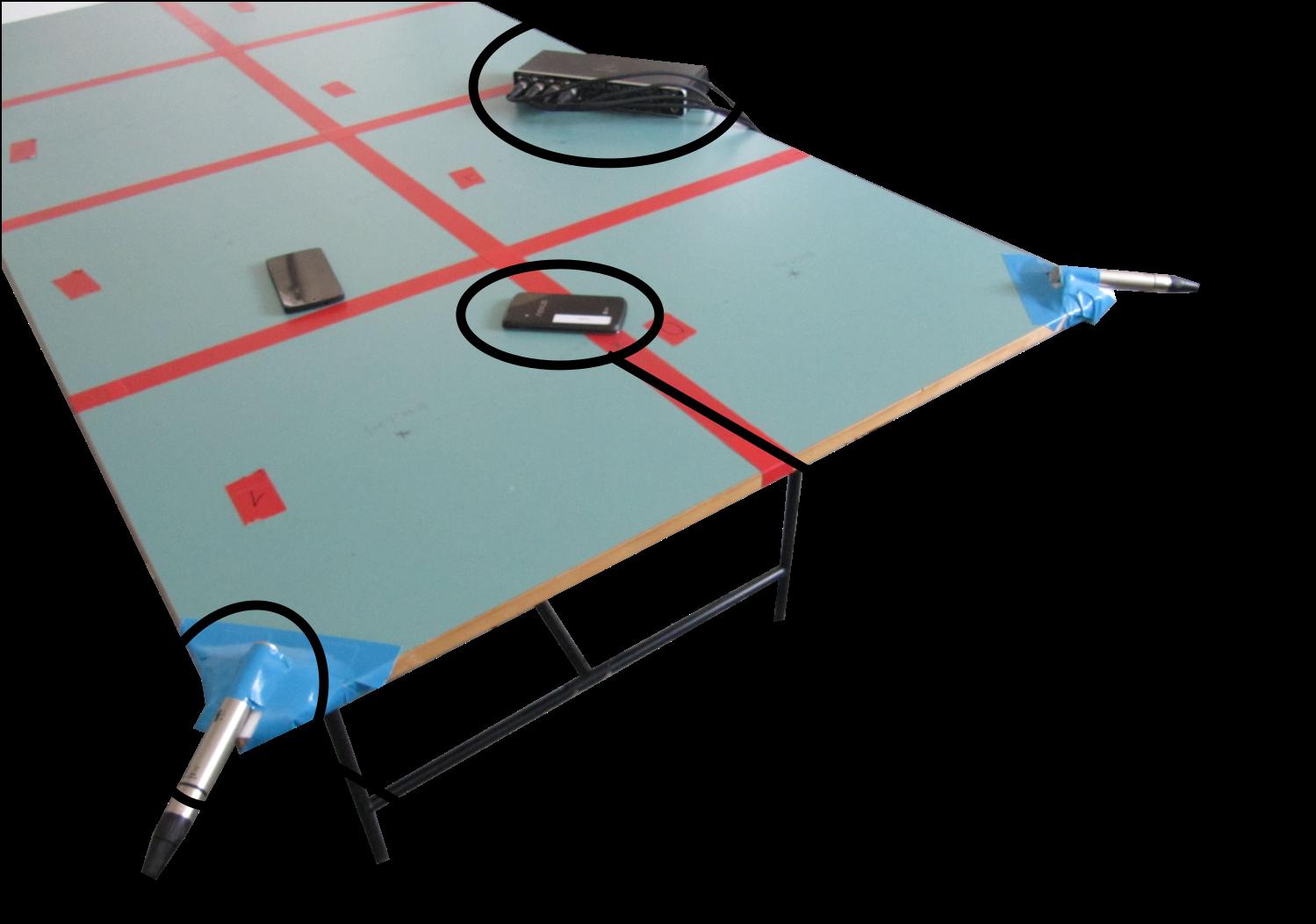

MoBat: Sound-Based Localization of Multiple Mobile Devices on Everyday Surfaces

Proceedings of the 2015 International Conference on Interactive Tabletops & Surfaces (ITS '15), Funchal, Madeira (2015-11-15)

Shoe me the Way: A Shoe-Based Tactile Interface for Eyes-Free Urban Navigation

Proceedings of the 17th International Conference on Human-Computer Interaction with Mobile Devices and Services (MobileHCI 2015), Copenhagen, Denmark (2015-08-24)

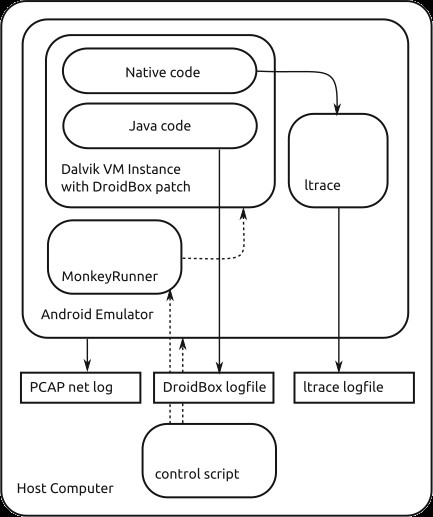

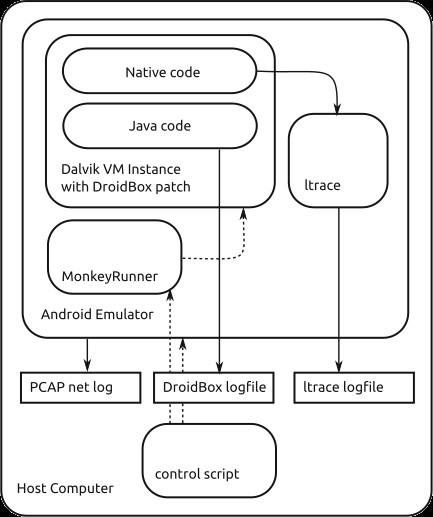

Mobile-Sandbox: combining static and dynamic analysis with machine-learning techniques

International Journal of Information Security, Springer, April 2015 (2015-04-01)

2014

TUIO Hackathon

Proceedings of the 9th ACM International Conference on Interactive Tabletops and Surfaces (ITS '14) (2014-11-16)

The Interactive Dining Table, or: Pass the Weather Widget, Please

Proceedings of the 9th ACM International Conference on Interactive Tabletops and Surfaces (ITS '14) (2014-11-16)

Engineering Gestures for Multimodal User Interfaces

Engineering Gestures for Multimodal Interfaces - Workshop at EICS 2014, Rome, Italy (2014-06-17)

Phone proxies: effortless content sharing between smartphones and interactive surfaces

EICS 2014 - ACM Symposium on Engineering Interactive Computing Systems (2014-06-17)

EyeDE: gaze-enhanced software development environments

CHI '14 Extended Abstracts on Human Factors in Computing Systems (2014-04-26)

CHI 2039: speculative research visions

CHI '14 Extended Abstracts on Human Factors in Computing Systems (2014-04-26)

2013

RefactorPad: Editing Source Code on Touchscreens

Proceedings of the 5th ACM SIGCHI symposium on Engineering interactive computing systems (EICS '13) (2013-06-24)

Exploring the Benefits of Fingernail Displays

Extended Abstracts of CHI 2013, Paris, France. (2013-04-27)

The Interactive Dining Table

CHI 2013 Workshop on Blended Interaction (Blend13), Paris, France. (2013-04-27)

Mobile-Sandbox: having a deeper look into Android applications

Proceedings of ACM SAC 2013, Coimbra, Portugal. (2013-03-18)

2012

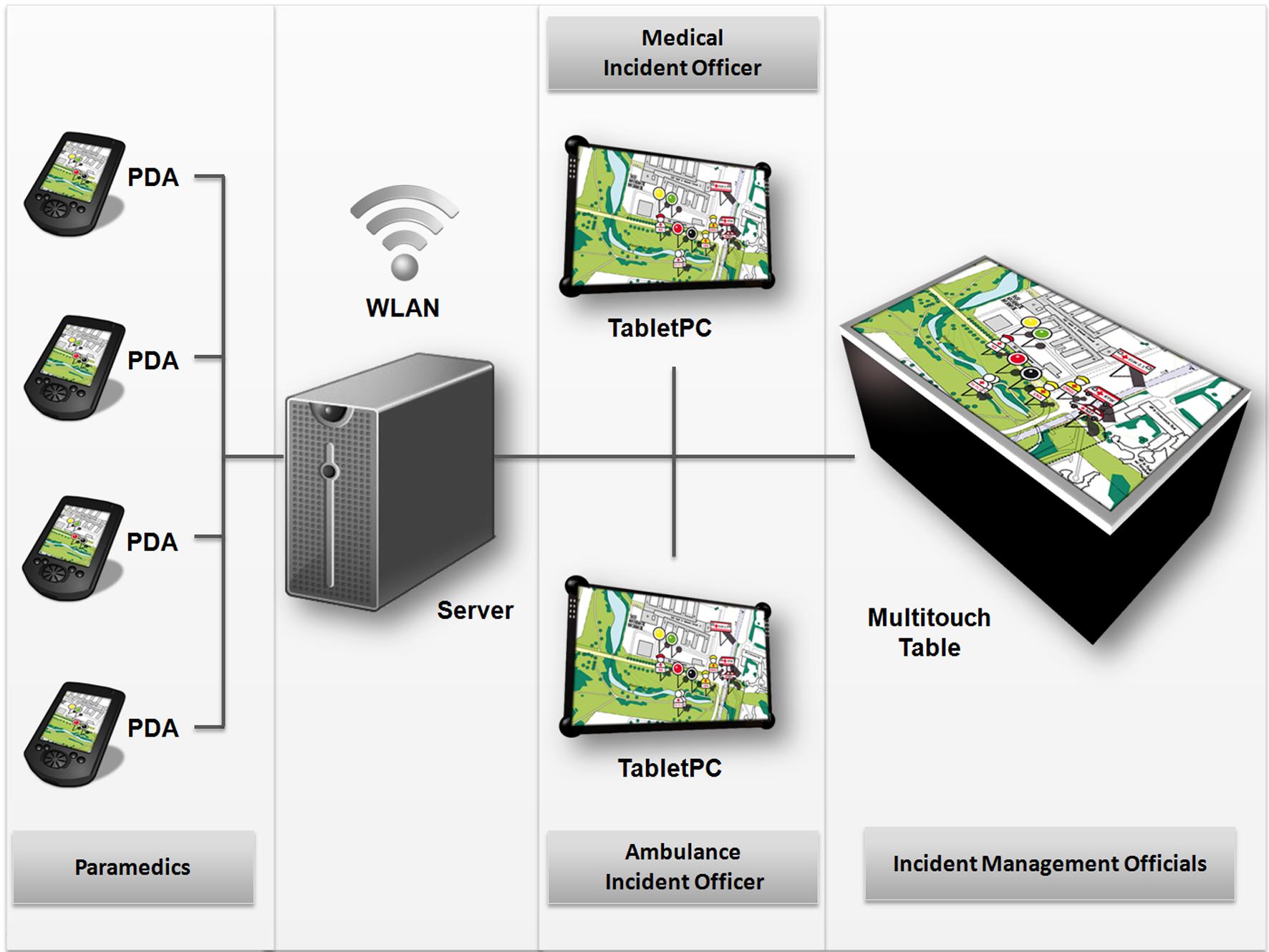

Creating a common operation picture in realtime with user-centered interfaces for mass casualty incidents

4th international workshop for Situation recognition and medical data analysis in Pervasive Health environments (PervaSense), PervasiveHealth 2012 (2012-07-03)

2011

Automatic Configuration of Pervasive Sensor Networks for Augmented Reality

IEEE Pervasive Computing, Volume 10, Number 3, July-Sept. 2011, pp. 68-79.

An Approach to Collaborative Music Composition

International Conference on New Interfaces for Musical Expression (NIME 2011), May 30th, 2011, Oslo, Norway.

Multi-Touch Table as Conventional Input Device

HCI International Extended Abstracts, July 9 - 14, Orlando, Florida, USA, pp. 237-241.

Exploring Multi-touch Gestures for Map Interaction in Mass Casualty Incidents

3. Workshop zur IT-Unterstützung von Rettungskräften im Rahmen der GI-Jahrestagung Informatik 2011

2010

Management of Tracking for Mixed and Augmented Reality Systems

The Engineering of Mixed Reality Systems, E. Dubois, P. Gray, L. Nigay (eds.), Human-Computer Interaction Series, Springer Verlag, 2010.

A Tabletop Interface for Generic Creativity Techniques

International Conference on Interfaces and Human Computer Interaction (IHCI 2010), July 26, 2010, Freiburg, Germany.

An LED-based Multitouch Sensor for LCD Screens

TEI 2010, January 25 - 27, Cambridge, MA, USA, pp. 227-230.

Features, Regions, Gestures: Components of a Generic Gesture Recognition Engine

Workshop on Engineering Patterns for Multitouch Interfaces, June 20, 2010, Berlin, Germany.

Towards a Unified Gesture Description Language

13th International Conference on Humans and Computers (HC 2010), December 8, 2010, Düsseldorf, Germany, pp. 177-182.

Beyond Pinch-to-Zoom: Exploring Alternative Multi-touch Gestures for Map Interaction

Technical Report TUM-I1006

2009

Low Cost 3D Rotational Input Devices: the stationary Spinball and the mobile Soap3D

The 11th Symposium on Virtual and Augmented Reality (SVR), Porto Alegre, Brazil, May 25 - 28, 2009. Best short paper

Development and evaluation of a virtual reality patient simulation (VRPS)

The 17th International Conference in Central Europe on Computer Graphics, Visualization and Computer Vision (WSCG'09)

Supporting Casual Interactions between Board Games on Public Tabletop Displays and Mobile Devices

Personal and Ubiquitous Computing, Special Issue on Public and Private Displays, Springer Verlag, 2009, pp. 609-617.

Tangible Information Displays

Dissertation at the Faculty of Informatics, Technische Universität München, Dec. 2009

A Short Guide to Modulated Light

TEI 2009, February 16 - 18, Cambridge, United Kingdom, pp. 393-396.

Inverted FTIR: Easy Multitouch Sensing for Flatscreens

ITS 2009, November 23 - 25, Banff, Canada, pp. 29-32.

2008

Collaborative problem solving on mobile hand-held devices and stationary multi-touch interfaces

PPD'08. Workshop on designing multi-touch interaction techniques for coupled public and private displays

Splitting the Scene Graph - Using Spatial Relationship Graphs Instead of Scene Graphs in Augmented Reality

3rd International Conference on Computer Graphics Theory and Applications (GRAPP '08)

Shadow Tracking on Multi-Touch Tables

AVI '08: 9th International Working Conference on Advanced Visual Interfaces, Naples, Italy, pp. 388-391.

A Multitouch Software Architecture

Proc. of the 5th Nordic Conference on Human-Computer Interaction: Using Bridges (NordiCHI 08), Lund, Sweden, pp. 463-466.

Tracking Mobile Phones on Interactive Tabletops

MEIS '08: Workshop on Mobile and Embedded Interactive Systems, Munich, Germany, pp. 285-290.

2007

Ubiquitous Tracking for Quickly Solving Multi-Sensor Calibration and Tracking Problems

Demonstration at the Sixth IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, Nov. 14 - 16, 2007

Tracking for Distributed Mixed Reality Environments

IEEE VR 2007 Workshop on 'Trends and Issues in Tracking for Virtual Environments', Charlotte, NC, USA, Mar 11, 2007

Design and Development of Virtual Patients

Vierter Workshop Virtuelle und Erweiterte Realität der GI-Fachgruppe VR/AR, Weimar, July 15, 2007

3D Visualization and Exploration of Relationships and Constraints at the Example of Sudoku Games

Technical Report TUM-I0722, Nov. 1, 2007, pp. 1-8.

A System Architecture for Ubiquitous Tracking Environments

The Sixth IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, Nov. 13 - 16, 2007, pp. 211-214.

Hand tracking for enhanced gesture recognition on interactive multi-touch surfaces

Technical Report TUM-I0721, Nov. 1, 2007.

2006

Using Semantic Web Languages in Argumentation Models

Forschungsberichte Künstliche Intelligenz FKI-253-06, TU München, 2006

2005

Interactive Simulation of Deformable Bodies on GPUs

Simulation and Visualisation Conference Proceedings, 2005

2004

FixIt: An Approach towards Assisting Workers in Diagnosing Machine Malfunctions

Proc. of the International Workshop on Design and Engineering of Mixed Reality Systems - MIXER 2004, Funchal, Madeira, CEUR Workshop Proceedings

Realization of efficient mass-spring simulations on graphics hardware

Diploma Thesis, TU München, 2004

2003

The Intelligent Welding Gun: Augmented Reality for Experimental Vehicle Construction

Chapter 17 in Virtual and Augmented Reality Applications in Manufacturing (VARAM), S.K. Ong and A.Y.C. Nee (eds.), Springer Verlag, 2003, pp. 333-360.

Icons based on CC-BY work by Adrien Coquet and Pedro Lalli from the Noun Project.